Key points:

Revival in demand for PCs.

Rollout of the “AI PC”.

The cloud and edge computing are both starved for compute power.

All roads lead to chipmakers.

From the “worth watching” department...

It may not be as exciting as artificial intelligence itself, but a derivative play may very well be the good ole’ personal computer.

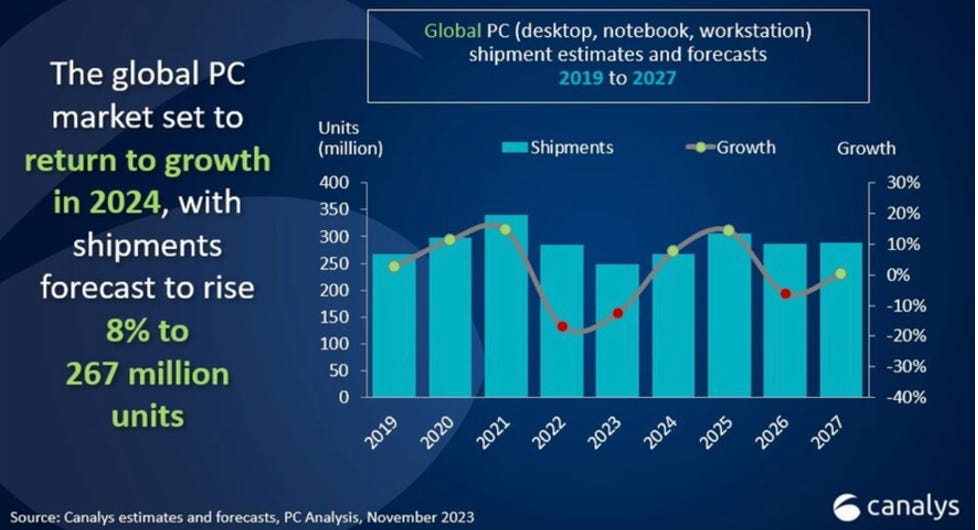

If you haven’t noticed, the industry has been absolutely pummeled over the past two years, the longest run of negative growth ever.

“It’s ripe for a refresh,” is the way Dell Technologies COO Jeffrey Clarke put it on his company’s earnings call in November.

He’s talking his book, of course, and this isn’t the first time he has said it, only to get ahead of himself...

But this time it really does seem different.

The Setup...

There are three things going on, two of which would, on their own, cause sales to pop higher:

The normal upgrade cycle.

The coming “end of life” for Microsoft’s Windows 10, which is likely to force a bunch of holdouts to new machines.

As Clarke explains...

There’ll be 300 million PCs turning four years old next year. That's typically a tipping point for upgrading in commercial. And most of those are notebooks. As everybody was working remote, the notebook mix went up, 300 million PCs that year. They're aging in time to refresh.

But there’s refresh, and rebound....

What’s Changed...

The real story – assuming it’s all it’s cracked up to be – is the third thing, which is something much bigger than the other two. As one friend who is better steeped in tech than many explains…

For the first time in a decade PCs are underpowered.

Enter the AI PC, a term seemingly coined by Intel CEO Pat Gelsinger on the company’s earnings call in July....

He went so far as to call the AI PC “a critical inflection point for the PC market over the coming years that will rival the importance of Centrino and WiFi in the early 2000s.” Centrino was Intel’s platform that helped drive the mobile computing market, and Intel claims its new Meteor Lake chip, in development, is the second coming of Centrino.

He went on to say...

I'd like you to harken back to original Wi-Fi and the Wi-Fi specs. And I was involved in helping to create Wi-Fi. We emerged in 1997, and we sort of went through like five, six fallow years. And then in 2003, Intel launched the first-gen Centrino platform. And it started to drive Wi-Fi at scale, and it gave rise to access points and hardware and comms and new applications. And it initiated a virtuous application cycle at the time.

He added...

We see the AI PC as a sea change moment in tech innovation.

Advanced Micro Devices’ Lisa Su, whose Ryzen chips are arguably a step ahead of Intel’s Meteor Lake, mentioned something similar on her earnings call in October, when she said...

What I'm most excited about in PCs is actually the AI PC. I think the AI PC opportunity is an opportunity to redefine what PCs are in terms of productivity tool and really sort of operating on sort of user data. And so I think we're at the beginning of a wave there.

She doubled down on it at AMD’s “Advancing AI Event” last week, when she said...

I really believe that we're actually at the beginning of this AI PC journey, and it's something that is really going to change the way we think about productivity at a personal level.

Similar comments came from Luca Rossi, who runs the PC segment at Lenovo, on Lenovo’s earnings call....

So AI PC will be an inflection point for the entire PC industry. And we believe it will be driving a significant replacement cycle that is valued for commercial and consumer segments. Today, AI is already present in our devices with our own IP. But what we are talking now for the future AI PC is a new class of devices...

Ditto from Alexander Cho, who runs HP Inc.’s PC business....

The AI opportunity we have in front of us is going to be an accelerator for our category even beyond this. Current industry estimates put AI PC penetration at an estimated 40% to 50% and with estimated higher ASPs ranging from 5% to 10%. This alone would double the current category growth through 2026. And we are only at the beginning of this journey.

If there’s a catch, it’s that the AI PC may not grow the PC market, but that doesn’t really matter. As Dell’s Clarke says…

What I believe is you don't want to be a PC user that doesn't have an AI-enabled chip in it. You're going to be next to people that have one, and yours doesn't, and it will perform differently. It won't be able to take advantage of some of the new exciting workloads, whether that's new forms of search, new forms of security, new forms of interacting with your PC itself, the ability to put some form of assistant around you.

And that’s it… that’s what really matters.

Just More Hype?

The bigger question is whether this is like so much else in Silicon Valley... are PCs about to be cool again, or is this merely more hope sprinkled with hype? Some analysts point to the lack of a “killer app” to spark an upgrade cycle...

And the whole concept of an “AI PC” has multiple analysts at investment conferences and on earnings calls specifically asking what it really means.

Quite a few suggest it’s really little more than a rebranding of gaming PCs.

But as my tech friend puts it...

I don't really care what it gets called, but people are slow to realize how underpowered PCs/Macs are for evolving needs at the edge.

He adds...

Like I said, the AI PC, for me, is just the idea that edge computing needs more power to do local large language models. Call it what you want.

And such PCs, by definition, will look like gaming machines, given the latter's reliance on high-end GPUs. That's not very surprising.

To that point, the research firm Canalys is out with a study that says the global PC market “is on a recovery path and set to return to 2019 shipment levels by next year.” More importantly, AI PCs should rise to around 19% of the PC market next year. (Here’s a deeper take by them.) And this is before they hit the mainstream.

Just today, as this story started to gain traction, an analyst at Evercore ISI said something similar.

How to Play It

Where this gets interesting...

Regardless of whether it expands the market, new demand alone may very well spark some interesting opportunities.

PCs are just a sliver of the AI story, and likely the most overlooked piece because they’re so mundane, but they could clearly be the icing on the ongoing AI story.

Without question the component makers, as always, are the way to play it. (I’d say the PC makers, too, but Dell has already had an extraordinary run, thanks to its server business. And HP, which was recommended today by Evercore, is weighed down by printers.)

Which leaves chips...

And if you believe, as some do, that we’re headed into an extended bull market in chip stocks, with edge computing and cloud computing starved for compute power, there are two options on the PC side: AMD and Intel.

AMD is already well-loved and respected.

By contrast, Intel is so out of favor that any improvement will likely surprise investors. Few companies in legacy tech have lower credibility. The company, in the midst of an attempted turnaround, will hold an “AI Everywhere” event this Thursday to lay out its case.

But perhaps the most interesting play would be the likes of memory maker Micron. After all, as is already the case with servers, AI PCs will require much more memory. Late last year, awash in inventory, Micron cut production... and went on to write off nearly $1 billion in existing inventory.

Current production is so tight that it won’t take much of a rebound in demand to spark extraordinary earnings leverage as the industry retools.

Here’s the thing...

Right now, the PC story is early, clearly taking a backseat to servers. But that’s perhaps the most important point of all: In investing, especially with tech, it’s okay to be early, not okay to be late.

If you like this, please don’t be shy about clicking the heart below. And if you disagree or would like to join the conversation, civil comments are welcome.

DISCLAIMER: This is solely my opinion based on my observations and interpretations of events, based on published facts and filings, and should not be construed as personal investment advice. (Because it isn’t!)

Feel free to contact me at herbgreenberg@substack.com. You can follow me on Threads @herbgreenberg.

I think it's still something of an open question whether or not there's a material advantage to running LLMs locally, but my initial thought here would be no for a few reasons.

First, it's a computationally and data-intensive workload with utterly trivial network requirements. The query/response is simple plain text, with the data requirements being the model itself and whatever supporting data may be desirable to flesh out the results or enable further computation. Why shell out top dollar to compete with tech-company wallets for constrained hardware supply when you can pay a subscription fee? Other than the privacy of your question/response, you're not gaining much here and paying a lot of money for it.

Second, running LLM computations on the edge means moving the models themselves to the edge. We're still in the early days, so the models/weights are quite valuable and expensive to produce. If there's very little practical incentive to run the models locally to begin with (see above), why risk someone taking the model and running when you can instead just host the model in the cloud?

Third, we're still largely in the "hope" phase of AI/LLMs. There's a lot of hope that this will generate real economic return commensurate with the cost, but I'm not sure to what extent this return has been realized. In my experience it's a net productivity improvement, but for newer or more junior people I can almost see that reversing, since they're not in as good a position to judge when they're getting a bad answer.

TL;DR: I think we're still in the "hope" phase of the technology itself, with a lot of hype and still a mixed bag on real-world return. Added to that, the incentives to push this compute to the edge seem weak at best, and there's likely more incentive to keep it off the edge for a while from a data-value perspective. There might be a time for this trade, but I think you're early.

AI is like the "oh boy" moment of "I can crunch a spreadsheet on my PC." That did nothing for productivity. And costs are a lot lower now, considering that first computer might have cost you a grand in 1990s dollars. AI is going to start a data stampede. Ask Bing a question and he goes to the same sources you already knew. It's faster (and less critical). Ultimately, are you any better at picking stocks (or racehorses; James Quinn wrote a book on that)? So if you thought information overload was a problem, get ready. Bing will help you sort through the data? See the lack of critical parsing of sources; the same old garbage-in, garbage-out admonitions apply. And Bill Gates will get rich all over again.